Reason #4: File manipulation before processing

Individuals have all sorts of email management styles, and sometimes those practices result in email deduplication issues. For example, I had a colleague who was in the habit of removing attachments from email before running his archival routine. Other folks store their email in rich text instead of the more common HTML format. Like mobile email, this practice creates far more attachments and changes certain fonts, generating more white space in the body of an email. These subtle changes are likely to result in deduplication issues.

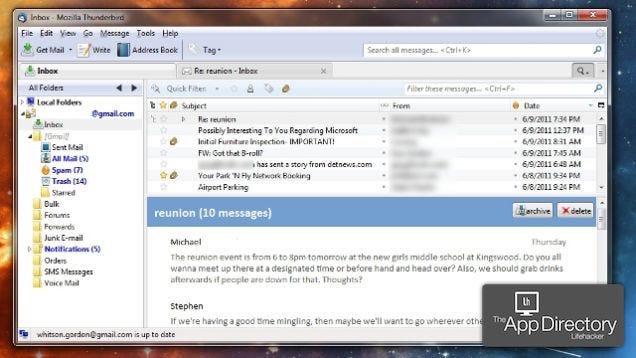

Reason #3: Custodian email management practices

When collecting mobile data, we find that images and icons are commonly extracted as attachments, while the same message in an enterprise email system leaves those image files intact. Because attachments feed into the hash, an email collected from both the phone and the Exchange server is unlikely to dedupe under these conditions.

Top deduplication issues

As data is managed at the source, subtle changes may take place that render deduplication ineffective. Although the hashing algorithm no longer recognizes these files as duplicates, the matching records continue to enter review populations, leading to review of the same file over and over. But what would cause the hash values to differ when collecting data across a single enterprise? While several things may cause identical records not to deduplicate, in our experience five reasons are the most common.

Reason #1: Enterprise email migration

As more organizations embark on cloud strategies, they are migrating their email from on-premises Exchange servers to cloud-based Microsoft Office 365. During the migration process, slight changes happen to a certain percentage of email files, resulting in deduplication failure. These changes could be something as simple as added white space or a hard return. We’ve found that certain characters are treated differently in different versions of Outlook.
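As a small illustration of how little it takes, the Python sketch below hashes two copies of a message body that differ only in hard returns and trailing white space. The sample text, the function names `body_hash` and `collapse_whitespace`, and the use of SHA-256 are all assumptions made for the example; it is a diagnostic sketch, not a description of how any particular processing tool behaves.

```python
import hashlib
import re


def body_hash(body: str) -> str:
    """Hash an email body exactly as given (hypothetical helper)."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def collapse_whitespace(body: str) -> str:
    """Collapse every run of white space (spaces, tabs, hard returns) to one space."""
    return re.sub(r"\s+", " ", body).strip()


# Two copies of the same message; the second picked up extra hard
# returns and a trailing space (illustrative sample data).
original = "Please see the attached report.\nThanks, Dana"
migrated = "Please see the attached report.\r\n\r\nThanks, Dana "

# The raw bodies hash differently, so the copies would not dedupe.
print(body_hash(original) == body_hash(migrated))  # False

# After collapsing white space the hashes match, which points to
# white space as the cause of the mismatch.
print(
    body_hash(collapse_whitespace(original))
    == body_hash(collapse_whitespace(migrated))
)  # True
```

Comparing hashes before and after a normalization step like this is one way to confirm that white space, rather than a real content difference, is what is keeping two copies apart.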

For non-email records, the system hashes the file in its entirety, creating a unique “fingerprint” from the file signature. Email files, however, are hashed by extracting a variety of metadata fields and combining those values into a string that is ultimately run through the hashing algorithm. Each processing tool performs this task differently from the next, but in most cases the metadata values include common email fields like From, To, CC, BCC, Sent Date and Time, Email Subject, Email Body, and attachment names. Any variation in those fields will result in a different hash value.
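The two hashing approaches described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field order in `EMAIL_FIELDS`, the `|` and `;` separators, the choice of SHA-256, and the function names `hash_loose_file` and `hash_email` are hypothetical, since each processing tool builds its hash string in its own way.

```python
import hashlib

# Hypothetical field order; real processing tools each define their own.
EMAIL_FIELDS = ["from", "to", "cc", "bcc", "sent", "subject", "body", "attachments"]


def hash_loose_file(data: bytes) -> str:
    """Hash a non-email record in its entirety."""
    return hashlib.sha256(data).hexdigest()


def hash_email(email: dict) -> str:
    """Combine extracted metadata fields into one string and hash that string."""
    parts = []
    for field in EMAIL_FIELDS:
        value = email.get(field, "")
        if isinstance(value, (list, tuple)):
            value = ";".join(value)  # e.g. several recipients or attachment names
        parts.append(str(value))
    combined = "|".join(parts)
    return hashlib.sha256(combined.encode("utf-8")).hexdigest()


# Illustrative sample message (all values are made up).
message = {
    "from": "alice@example.com",
    "to": ["bob@example.com"],
    "sent": "2019-03-01T09:15:00",
    "subject": "Quarterly report",
    "body": "Please see the attached report.",
    "attachments": ["report.pdf"],
}

# The same message with one extra attachment name in the list.
variant = dict(message, attachments=["report.pdf", "image001.png"])

print(hash_email(message) == hash_email(dict(message)))  # True
print(hash_email(message) == hash_email(variant))        # False
```

An exact copy produces the same hash, while a single extra attachment name changes the combined string and therefore the hash value.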

#Deduplicator in mailclient code#

In 2019, I am sure that most litigators and eDiscovery professionals understand the premise of deduplication. It is the process by which the processing tool gathers strings of data, converts those strings into hash codes, compares the hash values, identifies matching records, and flags one as unique and the others as duplicates. That being said, I’m not sure everyone realizes that the process for hashing loose files and attachments is different from the process used to hash email.
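The process just described (hash each record, compare the values, keep one copy as unique and flag the rest) can be sketched as follows. The function name `flag_duplicates`, the record IDs, and the use of SHA-256 are assumptions for illustration; a real processing tool would also decide which copy to keep based on its own rules, whereas this sketch simply keeps the first one it sees.

```python
import hashlib


def flag_duplicates(records):
    """Flag each (record_id, text) pair as 'unique' or 'duplicate'.

    The first record seen with a given hash is kept as unique; any later
    record with the same hash is flagged as a duplicate.
    """
    seen = set()
    flags = {}
    for record_id, text in records:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:
            flags[record_id] = "duplicate"
        else:
            seen.add(digest)
            flags[record_id] = "unique"
    return flags


# Illustrative records: DOC-002 is an exact copy of DOC-001, while
# DOC-003 differs by a single trailing hard return.
records = [
    ("DOC-001", "Meeting moved to 3pm."),
    ("DOC-002", "Meeting moved to 3pm."),
    ("DOC-003", "Meeting moved to 3pm.\n"),
]

print(flag_duplicates(records))
```

Here `DOC-002` is flagged as a duplicate, but `DOC-003` is treated as unique even though a reviewer would consider it the same message.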

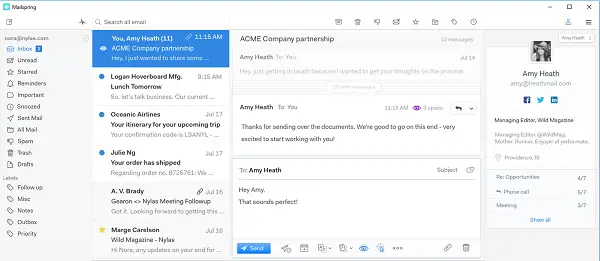

In today’s typical enterprise environment, collection points expand far beyond an Exchange server and a share drive. From the cloud and mobile data to social media and chat, collection points are incredibly diverse, leading to data-related issues that result in over-collection and the proliferation of duplicate records.
